Data modeling is the formalization and documentation of existing processes and events that occur during application software design and development. Data modeling techniques and tools capture and translate complex system designs into easily understood representations of the data flows and processes, creating a blueprint for construction and/or re-engineering. Data modeling in software engineering is the process of creating a data model for an information system by applying formal data modeling techniques.

A data model can be thought of as a diagram or flowchart that illustrates the relationships between data. Well-documented models allow stakeholders to identify errors and make changes before any programming code has been written. Data modelers often use multiple models to view the same data and ensure that all processes, entities, relationships and data flows have been identified. There are several different approaches to data modeling, including:

- Conceptual Data Modeling - identifies the highest-level relationships between different entities.

- Enterprise Data Modeling - similar to conceptual data modeling, but addresses the unique requirements of a specific business.

- Logical Data Modeling - illustrates the specific entities, attributes and relationships involved in a business function. Serves as the basis for the creation of the physical data model.

- Physical Data Modeling - represents an application and database-specific implementation of a logical data model.

Data modeling is a process used to define and analyze the data requirements needed to support the business processes within the scope of corresponding information systems in organizations. Therefore, the process of data modeling involves professional data modelers working closely with business stakeholders, as well as potential users of the information system. Three different types of data models are produced while progressing from requirements to the actual database to be used for the information system. The data requirements are initially recorded as a conceptual data model, which is essentially a set of technology-independent specifications about the data and is used to discuss initial requirements with the business stakeholders. The conceptual model is then translated into a logical data model, which documents structures of the data that can be implemented in databases. Implementation of one conceptual data model may require multiple logical data models. The last step in data modeling is transforming the logical data model into a physical data model that organizes the data into tables and accounts for access, performance and storage details.
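
The progression from conceptual to logical to physical model can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Customer` and `Order` entities, their attributes, the table and index names, and the choice of SQLite as the target database are all hypothetical and do not come from the text above.

```python
import sqlite3

# Conceptual level (hypothetical example): entity names and how they relate,
# with no attributes, keys, or types.
conceptual = {
    "entities": ["Customer", "Order"],
    "relationships": [("Customer", "places", "Order")],
}

# Logical level: attributes and keys per entity, still independent of any DBMS.
logical = {
    "Customer": {"attributes": ["customer_id", "name"], "key": "customer_id"},
    "Order": {
        "attributes": ["order_id", "customer_id", "placed_on"],
        "key": "order_id",
        "references": {"customer_id": "Customer"},
    },
}

# Every conceptual entity should be detailed in the logical model.
covered = set(logical) == set(conceptual["entities"])

# Physical level: concrete tables, column types, and an index, here for SQLite.
physical_ddl = """
CREATE TABLE customer (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL
);
CREATE TABLE customer_order (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customer(customer_id),
    placed_on   TEXT NOT NULL
);
CREATE INDEX idx_order_customer ON customer_order(customer_id);
"""

conn = sqlite3.connect(":memory:")
conn.executescript(physical_ddl)
tables = sorted(
    row[0]
    for row in conn.execute("SELECT name FROM sqlite_master WHERE type = 'table'")
)
print(covered, tables)
```

Only the last step mentions storage concerns such as column types and indexes; the first two structures could be discussed with stakeholders without choosing a database product.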

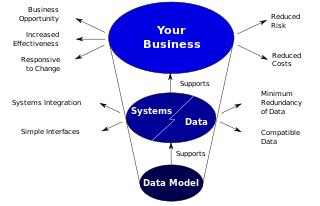

- How data models deliver benefit

The Benefits of Data Modeling

Abstraction

- The act of abstraction expresses a concept in its minimum, most universal set of properties. A well-abstracted data model will be economical and flexible to maintain and enhance, since it uses few symbols to represent a large body of design. If we can make a general design statement that is true for a broad class of situations, then we do not need to recode that point for each instance. We save repetitive labor, reduce the opportunities for human error, and enable broad-scale, uniform change of behavior by making central changes to the abstract definition.

- In data modeling, strong methodologies and tools provide several powerful techniques which support abstraction. For example, a symbolic relationship between entities need not specify details of foreign keys since they are merely a function of their relationship. Entity sub-types enable the model to reflect real world hierarchies with minimum notation. Automatic resolution of many-to-many relationships into the appropriate tables allows the modeler to focus on business meaning and solutions rather than technical implementation.
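
As a rough illustration of how a many-to-many relationship can be resolved into a table automatically, the sketch below derives an associative (junction) table from nothing more than the two entity names. The helper `junction_table_ddl`, the `student` and `course` tables, and the `<table>_id` key naming convention are assumptions made for this example; real modeling tools use their own rules.

```python
import sqlite3


def junction_table_ddl(left: str, right: str) -> str:
    """Derive the associative table for a many-to-many relationship.

    Assumes (hypothetically) that each entity table has an integer key
    named <table>_id, so the foreign keys follow from the relationship alone.
    """
    name = f"{left}_{right}"
    return (
        f"CREATE TABLE {name} (\n"
        f"    {left}_id INTEGER NOT NULL REFERENCES {left}({left}_id),\n"
        f"    {right}_id INTEGER NOT NULL REFERENCES {right}({right}_id),\n"
        f"    PRIMARY KEY ({left}_id, {right}_id)\n"
        f")"
    )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE student (student_id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("CREATE TABLE course (course_id INTEGER PRIMARY KEY, title TEXT)")
conn.execute(junction_table_ddl("student", "course"))

conn.execute("INSERT INTO student VALUES (1, 'Ada')")
conn.executemany(
    "INSERT INTO course VALUES (?, ?)", [(10, "Databases"), (11, "Logic")]
)
conn.executemany("INSERT INTO student_course VALUES (?, ?)", [(1, 10), (1, 11)])

# Courses taken by student 1, reached through the generated junction table.
titles = [
    row[0]
    for row in conn.execute(
        "SELECT c.title FROM course AS c "
        "JOIN student_course AS sc ON sc.course_id = c.course_id "
        "WHERE sc.student_id = 1 ORDER BY c.title"
    )
]
print(titles)
```

The modeler states only that students and courses are related many-to-many; the key columns and the composite primary key are a mechanical consequence of that statement.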

Transparency

- Transparency is the property of being intuitively clear and understandable from any point of view. A good data model enables its designer to perceive truthfulness of design by presenting an understandable picture of inherently complex ideas. The data model can reveal inaccurate grouping of information (normalization of data items), incorrect relationships between objects (entities), and contrived attempts to force data into preconceived processing arrangements.

- It is not sufficient for a data model to exist merely as a single global diagram with all content smashed into little boxes. To provide transparency, a data model needs to enable examination in several dimensions and views: diagrams by functional area and by related data structures; lists of data structures by type and groupings; context-bound explosions of details within abstract symbols; data-based queries into the data describing the model.

Effectiveness

- An effective data model does the right job - the one for which it was commissioned - and does the job right - accurately, reliably, and economically. It is tuned to enable acceptable performance at an affordable operating cost.

- To generate an effective data model, the tools and techniques must not only capture a sound conceptual design but also translate it into a workable physical database schema. At that level, a number of implementation issues that are implicit or ignored at the conceptual level must be addressed, for example reducing insert and update times, minimizing joins on retrieval without limiting access, simplifying access with views, and enforcing referential integrity.

- An effective data model is durable; that is, it ensures that a system built on its foundation will meet unanticipated processing requirements for years to come. A durable data model is sufficiently complete that the system does not need constant reconstruction to accommodate new business requirements and processes.

- Furthermore, as additional data structures are defined over time, an effective data model is easily maintained and adapted because it reflects permanent truths about the underlying subjects rather than temporary techniques for dealing with those subjects.
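
Two of the physical-level concerns named above, enforcing referential integrity and simplifying access with views, can be shown with a small SQLite sketch. The `department` and `employee` tables and the `employee_directory` view are hypothetical names chosen for the example, and SQLite is assumed only because it ships with Python.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite does not enforce foreign keys unless this pragma is switched on.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
CREATE TABLE department (
    department_id INTEGER PRIMARY KEY,
    name          TEXT NOT NULL
);
CREATE TABLE employee (
    employee_id   INTEGER PRIMARY KEY,
    name          TEXT NOT NULL,
    department_id INTEGER NOT NULL REFERENCES department(department_id)
);
-- The view hides the join from anyone who only needs a directory listing.
CREATE VIEW employee_directory AS
    SELECT e.name AS employee, d.name AS department
    FROM employee AS e
    JOIN department AS d ON d.department_id = e.department_id;
""")

conn.execute("INSERT INTO department VALUES (1, 'Finance')")
conn.execute("INSERT INTO employee VALUES (1, 'Lin', 1)")

# Department 99 does not exist, so the database should refuse this row.
try:
    conn.execute("INSERT INTO employee VALUES (2, 'Sam', 99)")
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

directory = conn.execute(
    "SELECT employee, department FROM employee_directory"
).fetchall()
print(rejected, directory)
```

Neither the pragma nor the view would appear in a conceptual model; both are decisions that only become visible once the design is translated to a specific database.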

Data Modeling Process

The process of designing a database involves producing the previously described three types of schemas: conceptual, logical, and physical. The database design documented in these schemas is converted through a Data Definition Language, which can then be used to generate a database. A fully attributed data model contains detailed attributes (descriptions) for every entity within it. The term "database design" can describe many different parts of the design of an overall database system. Principally, and most correctly, it can be thought of as the logical design of the base data structures used to store the data. In the relational model these are the tables and views. In an object database the entities and relationships map directly to object classes and named relationships. However, the term "database design" can also refer to the overall process of designing not just the base data structures, but also the forms and queries used as part of the overall database application within the Database Management System (DBMS).
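
To make the conversion step concrete, here is a minimal sketch of turning a fully attributed entity description into a Data Definition Language statement and using it to create a table. The `Attribute` and `Entity` classes, the `to_ddl` helper, and the `product` entity are hypothetical and written for this example; they are not taken from any particular modeling tool. The code assumes Python 3.9 or later.

```python
import sqlite3
from dataclasses import dataclass, field


@dataclass
class Attribute:
    name: str
    sql_type: str
    nullable: bool = False


@dataclass
class Entity:
    name: str
    key: str
    attributes: list[Attribute] = field(default_factory=list)


def to_ddl(entity: Entity) -> str:
    """Render one fully attributed entity as a CREATE TABLE statement."""
    columns = []
    for attr in entity.attributes:
        parts = [attr.name, attr.sql_type]
        if attr.name == entity.key:
            parts.append("PRIMARY KEY")
        elif not attr.nullable:
            parts.append("NOT NULL")
        columns.append(" ".join(parts))
    return f"CREATE TABLE {entity.name} ({', '.join(columns)})"


# Hypothetical entity used only to exercise the generator.
product = Entity(
    name="product",
    key="product_id",
    attributes=[
        Attribute("product_id", "INTEGER"),
        Attribute("title", "TEXT"),
        Attribute("discontinued_on", "TEXT", nullable=True),
    ],
)

ddl = to_ddl(product)
print(ddl)

# Running the generated DDL produces the actual database structure.
conn = sqlite3.connect(":memory:")
conn.execute(ddl)
columns = [row[1] for row in conn.execute("PRAGMA table_info(product)")]
print(columns)
```

Keeping the entity description separate from the generated statement means the same model could, in principle, be rendered for a different database by changing only `to_ddl`.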

In the process, system interfaces account for 25% to 70% of the development and support costs of current systems. The primary reason for this cost is that these systems do not share a common data model. If data models are developed on a system-by-system basis, then not only is the same analysis repeated in overlapping areas, but further analysis must be performed to create the interfaces between them. Most systems within an organization contain the same basic data, redeveloped for a specific purpose. Therefore, an efficiently designed basic data model can minimize rework, requiring only minimal modifications for the purposes of different systems within the organization.

- Data modeling in the context of Business Process Integration

Types of Data Models

A database model is a theory or specification describing how a database is structured and used. Several such models have been suggested. Common models include:

- Flat model: This may not strictly qualify as a data model. The flat (or table) model consists of a single, two-dimensional array of data elements, where all members of a given column are assumed to be similar values, and all members of a row are assumed to be related to one another.

- Hierarchical model: In this model data is organized into a tree-like structure, implying a single upward link in each record to describe the nesting, and a sort field to keep the records in a particular order in each same-level list.

- Network model: This model organizes data using two fundamental constructs, called records and sets. Records contain fields, and sets define one-to-many relationships between records: one owner, many members.

- Relational model: A database model based on first-order predicate logic. Its core idea is to describe a database as a collection of predicates over a finite set of predicate variables, describing constraints on the possible values and combinations of values.

- Object-relational model: Similar to a relational database model, but objects, classes and inheritance are directly supported in database schemas and in the query language.

- Star schema: The simplest style of data warehouse schema. The star schema consists of a few "fact tables" (possibly only one, justifying the name) referencing any number of "dimension tables". The star schema is considered an important special case of the snowflake schema.
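
The star schema described in the last item can be illustrated with one fact table that references two dimension tables. The table names (`fact_sales`, `dim_product`, `dim_date`), the columns, and the sample rows are all invented for this sketch, which again assumes SQLite.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables describe the "who/what/when" of each measurement.
CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, category TEXT NOT NULL);
CREATE TABLE dim_date (date_id INTEGER PRIMARY KEY, year INTEGER NOT NULL);

-- The single fact table holds the measurements and points at the dimensions.
CREATE TABLE fact_sales (
    product_id INTEGER NOT NULL REFERENCES dim_product(product_id),
    date_id    INTEGER NOT NULL REFERENCES dim_date(date_id),
    amount     INTEGER NOT NULL
);

INSERT INTO dim_product VALUES (1, 'Books'), (2, 'Games');
INSERT INTO dim_date VALUES (1, 2023), (2, 2024);
INSERT INTO fact_sales VALUES (1, 1, 30), (1, 2, 20), (2, 2, 50), (2, 2, 5);
""")

# A typical star-schema query: join the fact table to its dimensions,
# then aggregate the measure by dimension attributes.
totals = conn.execute("""
    SELECT p.category, d.year, SUM(f.amount)
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_id = f.product_id
    JOIN dim_date AS d ON d.date_id = f.date_id
    GROUP BY p.category, d.year
    ORDER BY p.category, d.year
""").fetchall()
print(totals)
```

Every join in the query goes through the fact table, which is the shape that gives the schema its name.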

source: wikipedia
